DTR (Data Transfer Reloaded)

This page describes the data staging framework for ARC, code-named DTR (Data Transfer Reloaded).

Overview

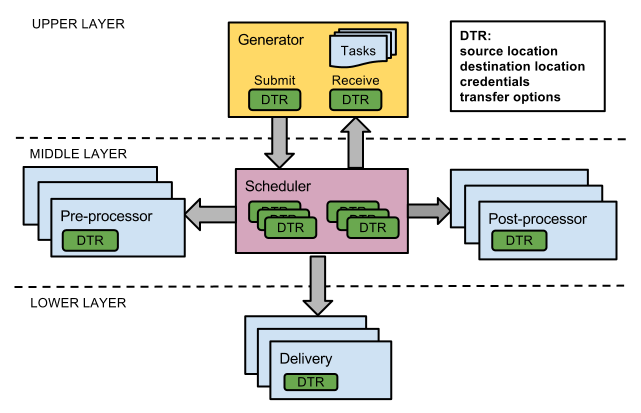

A-REX, ARC’s Computing Element, transfers data for jobs before and after the jobs run. The data staging framework, DTR (Data Transfer Reloaded), uses a three-layer architecture, shown in the figure above.

The Generator takes user-supplied tasks and constructs one Data Transfer Request (also abbreviated DTR) per file that needs to be transferred. These DTRs are sent to the Scheduler for processing. The Scheduler sends each DTR to the Pre-processor for anything that must be done before the physical transfer takes place (e.g. cache check, resolve replicas) and then to Delivery for the transfer itself. Once the transfer has finished, the Post-processor handles any post-transfer operations (e.g. register replicas, release requests). The number of slots available for each component is limited, so the Scheduler maintains queues and decides when to allocate slots to specific DTRs, based on the prioritisation algorithm implemented. See Data_Staging/Prioritizing for more information.
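The flow described above can be sketched in a few lines of Python. This is only an illustrative model, not the libarcdatastaging implementation: the class and function names (`DTR`, `Scheduler`, `generate`), the three stage labels, the slot counts and the "higher number is served first" priority rule are all assumptions made for the example.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Hypothetical stage labels; the real DTR state machine has many more states.
STAGES = ["pre-process", "delivery", "post-process"]


@dataclass
class DTR:
    """One Data Transfer Request: a single file to move."""
    source: str
    destination: str
    priority: int = 50                      # assumed: higher is served first
    stage: int = 0                          # index into STAGES
    history: list = field(default_factory=list)


def generate(files, priority):
    """Generator layer: one DTR per (source, destination) pair of a job."""
    return [DTR(src, dst, priority) for src, dst in files]


class Scheduler:
    def __init__(self, slots):
        self.slots = dict(slots)            # max DTRs per stage per round
        self.queues = {stage: [] for stage in STAGES}
        self.finished = []                  # DTRs handed back to the Generator
        self._tie = count()                 # FIFO order among equal priorities

    def receive(self, dtr):
        """Queue a DTR for its next stage, or return it if all stages are done."""
        if dtr.stage == len(STAGES):
            self.finished.append(dtr)
            return
        queue = self.queues[STAGES[dtr.stage]]
        heapq.heappush(queue, (-dtr.priority, next(self._tie), dtr))

    def tick(self):
        """One scheduling round: fill each stage's free slots by priority."""
        processed = []
        for stage in STAGES:
            queue = self.queues[stage]
            for _ in range(min(self.slots[stage], len(queue))):
                _, _, dtr = heapq.heappop(queue)
                dtr.history.append(stage)   # stand-in for the real work
                dtr.stage += 1
                processed.append(dtr)
        for dtr in processed:               # re-queue only after the round
            self.receive(dtr)

    def run(self):
        while any(self.queues.values()):
            self.tick()
        return self.finished


sched = Scheduler({"pre-process": 2, "delivery": 1, "post-process": 2})
jobs = generate([("srm://se.example.org/a", "/scratch/a")], 50)
jobs += generate([("https://web.example.org/b", "/scratch/b")], 80)
for dtr in jobs:
    sched.receive(dtr)
print([d.destination for d in sched.run()])  # ['/scratch/b', '/scratch/a']
```

With a single Delivery slot, the higher-priority DTR is transferred first while the other waits in the queue, which is the queueing behaviour the paragraph describes.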

This layered architecture allows any implementation of a particular component to be easily substituted for another, for example a GUI with which users can enter DTRs (Generator) or an external point-to-point file transfer service (Delivery).

Implementation

The middle and lower layers of the architecture (Scheduler, Processor and Delivery) are implemented as a separate library, libarcdatastaging (in src/libs/data-staging in the ARC source tree). This library is included in the nordugrid-arc common libraries package. It depends on some other common ARC libraries and on the DMC modules (which enable various data access protocols and are included in the nordugrid-arc-plugins-* packages), but is independent of other components such as A-REX or the ARC clients. A simple Generator is included in this library for testing purposes. A Generator for A-REX is implemented in src/services/a-rex/grid-manager/jobs/DTRGenerator.(h|cpp); it performs the task of the downloaders and uploaders, turning job descriptions into data transfer requests.

Using DTR in A-REX

Data staging is enabled by default and is configured using the [data-staging] block.

All configuration parameters in this block are optional and have sensible defaults (see below), but can be tuned to a particular site’s requirements and can also enable multi-host data staging.

The multi-host parameters are explained in more detail in Data_Staging/Multi-host.

Example:

    [data-staging]
    maxdelivery=10
    maxprocessor=20
    maxemergency=2
    maxprepared=50
    sharepolicy=voms:role
    sharepriority=myvo:production 80
    deliveryservice=https://spare.host:60003/datadeliveryservice
    localdelivery=yes
    remotesizelimit=1000000

Client-side priorities

To specify the priority of a job on the client side, the “priority” element can be added to an xRSL job description, e.g.

("priority" = "80")

For a full explanation of how priorities work see Data_Staging/Prioritizing.
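As a small illustration, a submission script could add the attribute to an existing xRSL string as sketched below. The helper name `with_priority` is hypothetical, and the accepted range of 1 to 100 is an assumption to be checked against the prioritisation documentation.

```python
def with_priority(xrsl: str, priority: int) -> str:
    """Append a priority attribute to an xRSL job description string."""
    # Assumed valid range; verify against the prioritisation documentation.
    if not 1 <= priority <= 100:
        raise ValueError(f"priority {priority} is outside the assumed range 1-100")
    return f'{xrsl.rstrip()}("priority" = "{priority}")'


job = '&("executable" = "run.sh")'
print(with_priority(job, 80))  # &("executable" = "run.sh")("priority" = "80")
```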

gm-jobs -s

The command “gm-jobs -s”, which shows transfer share information, now reports that information per file rather than per job. The number under “Preparing” is the number of DTRs in the TRANSFERRING state, i.e. performing the physical transfer. DTRs in any other state count towards the “Pending” files. For example:

    Preparing/Pending files   Transfer share

As before, per-job logging information is in the /job.id.errors files.
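The share listing printed by “gm-jobs -s” can be post-processed with a short script. The sketch below assumes that each data row consists of a `preparing/pending` pair followed by the share name; the sample rows and share names are invented for illustration and are not real command output.

```python
import re

# Assumed row layout: "<preparing>/<pending>   <share name>"
ROW = re.compile(r"^\s*(\d+)/(\d+)\s+(\S+)\s*$")


def parse_shares(text):
    """Map share name -> (preparing, pending), skipping header and other lines."""
    shares = {}
    for line in text.splitlines():
        match = ROW.match(line)
        if match:
            preparing, pending, share = match.groups()
            shares[share] = (int(preparing), int(pending))
    return shares


# Hypothetical sample in the assumed layout.
sample = """\
Preparing/Pending files   Transfer share
         2/86             myvo:production
         0/12             myvo:null
"""
print(parse_shares(sample))
```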

Supported Protocols

The following access and transfer protocols are supported. Note that third-party transfer is not supported.

- file

- HTTP(s/g)

- GridFTP

- SRM

- Xrootd

- RFIO/DCAP/LFC (through GFAL2 plugins)

- S3

- Rucio

- ACIX

- LDAP

Multi-host Data Staging

To increase overall bandwidth, multiple hosts can be used to perform the physical transfers. See Data_Staging/Multi-host for details.

Monitoring

In A-REX the state, priority and share of every DTR are written to the file /dtrstate.log every second. This file is then used by the Gangliarc framework to show data staging information as Ganglia metrics. See Data_Staging/Monitoring for more information.
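A site-specific monitoring script could summarise such a file along the following lines. The layout assumed here (one DTR per line, with whitespace-separated ID, state, priority and share) is a guess for the purpose of the example; consult Data_Staging/Monitoring for the actual format. The sample lines, IDs and state names other than TRANSFERRING are hypothetical.

```python
from collections import Counter


def summarise(lines):
    """Count DTRs per state and per share from lines in the assumed layout."""
    by_state, by_share = Counter(), Counter()
    for line in lines:
        fields = line.split()
        if len(fields) < 4:          # skip blank or malformed lines
            continue
        _dtr_id, state, _priority, share = fields[:4]
        by_state[state] += 1
        by_share[share] += 1
    return by_state, by_share


# Hypothetical sample: "<DTR id> <state> <priority> <share>"
sample = [
    "dtr-0001 TRANSFERRING 80 myvo:production",
    "dtr-0002 TRANSFER_WAIT 80 myvo:production",
    "dtr-0003 TRANSFERRING 50 myvo:null",
    "",
]
states, shares = summarise(sample)
print(dict(states))
print(dict(shares))
```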

Using DTR in third-party applications

See the Data staging section of the SDK documentation for API reference and examples.

Advantages

DTR offers many advantages, including:

- High performance - When a transfer finishes in Delivery, there is always another prepared and ready, so the network is kept fully used. A file stuck in a pre-processing step does not block others from being prepared, nor does it affect any physical transfers that are running or queued. Cached files are processed instantly rather than waiting behind files that need to be transferred. Bulk calls are implemented for some operations and protocols.

- Fast - All state is held in memory, which enables extremely fast queue processing. The system knows which files are writing to cache and so does not need to constantly poll the file system for lock files.

- Clean - When a DTR is cancelled mid-transfer, the destination file is deleted and all resources such as SRM pins and cache locks are cleaned up before returning the DTR to the Generator. On A-REX shutdown all DTRs can be cleanly cancelled in this way.

- Fault tolerance - The state of the system is frequently dumped to a file, so in the event of a crash or power cut this file can be read to recover the state of ongoing transfers. Transfers stopped mid-way are automatically restarted after the half-finished attempt has been cleaned up.

- Intelligence - Error handling distinguishes temporary errors, such as those caused by network glitches, timeouts or busy remote services, and retries them transparently.

- Prioritisation - Both the server admins and users have control over which data transfers have which priority.

- Monitoring - Admins can see the state of the system at a glance, and because a standard framework such as Ganglia is used, they can monitor ARC in the same way as the rest of their system.

- Scalable - An arbitrary number of extra hosts can easily be added to the system to scale up the available bandwidth. The system has been tested with up to tens of thousands of concurrent DTRs.

- Configurable - The system can run with no configuration changes, or many detailed options can be tweaked.

- Generic flexible framework - The framework is not specific to ARC’s Computing Element (A-REX) and can be used by any generic data transfer application.
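The fault-tolerance behaviour listed above (periodic state dump, recovery after a crash, restart of interrupted transfers) can be sketched as follows. This is a minimal model under stated assumptions, not how DTR stores its state: the JSON file format, the function names, the `cleanup_needed` flag and the "NEW" state label are all hypothetical; only the TRANSFERRING state name comes from this page.

```python
import json
import os
import tempfile


def dump_state(dtrs, path):
    """Write the DTR list to `path` atomically, so a crash never leaves a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as handle:
        json.dump(dtrs, handle)
    os.replace(tmp, path)            # atomic rename over the previous dump


def recover_state(path):
    """Reload DTRs after a restart; interrupted transfers are reset to start again."""
    if not os.path.exists(path):
        return []
    with open(path) as handle:
        dtrs = json.load(handle)
    for dtr in dtrs:
        if dtr["state"] == "TRANSFERRING":
            dtr["state"] = "NEW"             # hypothetical initial state
            dtr["cleanup_needed"] = True     # half-written destination must be removed
    return dtrs


with tempfile.TemporaryDirectory() as workdir:
    state_file = os.path.join(workdir, "state.json")
    dump_state(
        [
            {"id": "dtr-0001", "state": "TRANSFERRING"},
            {"id": "dtr-0002", "state": "CACHE_CHECKED"},
        ],
        state_file,
    )
    for dtr in recover_state(state_file):
        print(dtr)
```

Writing to a temporary file and renaming it means a reader sees either the previous complete dump or the new one, never a truncated file.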